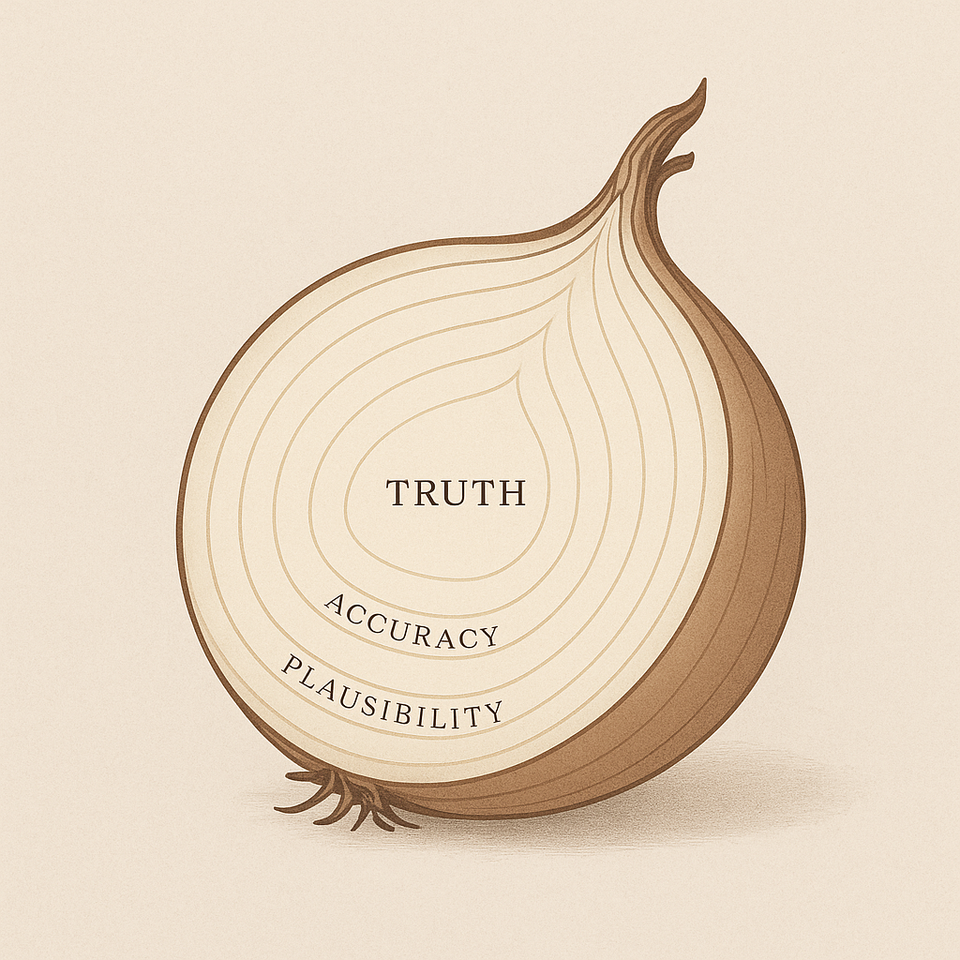

The “Onion of Truth” for Generative AI

Generative AI is transforming how we interact with technology. It writes essays, answers complex questions, and even helps people explore profound topics. However, understanding how generative AI produces its answers is crucial, especially when dealing with deeply personal or religious matters. Trusting generative AI for objective truth requires understanding the "layers of truth" that sit between reality and the response you read, each of which affects how reliable that response is.

| Layer | What it controls | How it can sabotage truth |
|---|---|---|
| 1. Reality itself | The actual, objective facts of the universe | Facts can be nuanced, disputed, or change over time |
| 2. Source material | What was written in the text the model trained on | Typos, outdated science, propaganda, fringe opinions, synthetic spam |
| 3. Training mix & weighting | Which sources got sampled, how often, and with what filtering | Over- or under-representation skews the model’s “beliefs” |
| 4. Model compression | Billions of weights squeeze vast amounts of text into finite memory | Nuance is lost; rare facts may be blurred or merged with nearby concepts |
| 5. Token-probability ranking | The raw probabilities the model assigns to each possible next token | Even with perfect data, optimization noise can rank the wrong token first |
| 6. Decoding strategy (deterministic vs. stochastic) | How probabilities are turned into a sequence of words | Greedy/argmax always picks the #1 token (deterministic, but can lock in an error). Sampling with temperature/top-p picks among high-probability tokens (stochastic; can rescue or worsen truth) |
| 7. Prompt context | What you actually asked, and how clearly | Missing detail, ambiguous wording, or trick questions mislead the model |
| 8. Hallucination dynamics | How the model fills gaps when it has low confidence | Confident fabrication of citations, dates, code, etc., even if the corpus never claimed it |
| 9. Post-processing & guardrails | Any external fact-checking, retrieval, or moderation layers | Weak or absent guardrails let errors pass straight to the user |
| 10. User interpretation | How you read, trust, or cross-check the answer | Misreading nuance, ignoring uncertainty cues, or over-trusting fluency |

The Layers of Truth in Generative AI:

1. Reality Itself

Reality represents objective facts: what truly exists or happened. However, not all truths are universally accepted or easily defined; some facts are nuanced, disputed, or still evolving. Generative AI has no direct access to reality, only to text written about it, so it may oversimplify these nuances or miss them entirely.

2. Source Material

Generative AI learns from massive amounts of text, known as training data. If this data contains incorrect, outdated, or biased information, the model absorbs these inaccuracies as "truths." Religious material is a clear example: texts and their interpretations vary significantly between traditions, so training data can contain contradictory teachings as well as outright misinformation.
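To make this concrete, here is a deliberately tiny sketch, not how a real model is built: a toy "model" that simply answers with whichever completion it saw most often in its training pairs. The corpus, the prompt, and the function names `train` and `answer` are all hypothetical. Because the wrong completion appears more often, the toy model repeats it, with no notion of which answer is true.

```python
from collections import Counter

# Hypothetical toy corpus of (prompt, completion) pairs "seen" during training.
# The wrong completion appears twice, e.g. because it was copied by spam sites.
corpus = [
    ("boiling point of water at sea level", "100 C"),
    ("boiling point of water at sea level", "90 C"),
    ("boiling point of water at sea level", "90 C"),
]

def train(pairs):
    """Count how often each completion follows each prompt."""
    table = {}
    for prompt, completion in pairs:
        table.setdefault(prompt, Counter())[completion] += 1
    return table

def answer(table, prompt):
    """Return the most frequent completion; frequency stands in for 'truth'."""
    return table[prompt].most_common(1)[0][0]

model = train(corpus)
print(answer(model, "boiling point of water at sea level"))  # prints "90 C"
```

Real models generalize far beyond counting, but the underlying pressure is similar: what the data says often tends to become what the model says.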

3. Training Mix and Weighting

Not all training data are treated equally. Some sources are emphasized, while others are underrepresented. If certain religious views are disproportionately represented, AI models might reflect a biased or limited perspective, unintentionally promoting one interpretation as universally true.
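The effect of weighting can be shown with simple arithmetic. In this minimal sketch, two hypothetical sources (`view_a` and `view_b`) contribute the same number of documents, but an assumed sampling weight of 3.0 versus 1.0 gives the first source three quarters of what the model actually trains on. The source names, counts, and weights are invented for illustration.

```python
# Hypothetical sources: equal document counts, unequal sampling weights.
sources = {
    "view_a": {"docs": 100, "weight": 3.0},
    "view_b": {"docs": 100, "weight": 1.0},
}

def effective_share(sources):
    """Fraction of training samples each source receives after weighting."""
    mass = {name: s["docs"] * s["weight"] for name, s in sources.items()}
    total = sum(mass.values())
    return {name: m / total for name, m in mass.items()}

print(effective_share(sources))  # {'view_a': 0.75, 'view_b': 0.25}
```

Nothing about either view's accuracy changed; only the mix did, yet one perspective now dominates the training signal.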

4. Model Compression

AI models compress vast amounts of data into mathematical weights. During this process, subtle distinctions, such as the line between a fact and an opinion or between two closely related ideas, can be lost or blurred. This can make complex religious concepts appear oversimplified or inaccurately generalized.
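As a loose analogy only (real models do not store each concept as a single number), the sketch below shows how reducing storage precision makes nearby distinctions vanish. Three hypothetical "concepts" are placed on a 0 to 1 scale and stored with either 256 or 4 levels; with 4 levels, the two close concepts collapse into the same stored value. The `quantize` function and the concept values are assumptions made for illustration.

```python
def quantize(x, levels, lo=0.0, hi=1.0):
    """Map x in [lo, hi] to the nearest of `levels` evenly spaced values."""
    step = (hi - lo) / (levels - 1)
    return lo + round((x - lo) / step) * step

# Hypothetical concepts: x and y are close but distinct, z is far away.
concepts = {"concept_x": 0.31, "concept_y": 0.34, "concept_z": 0.80}

for levels in (256, 4):
    stored = {name: quantize(v, levels) for name, v in concepts.items()}
    print(levels, stored)
```

With fine precision all three stay distinct; with coarse precision `concept_x` and `concept_y` become indistinguishable, which is the kind of blurring described above.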

5. Token-Probability Ranking

AI generates answers by assigning probabilities to possible next words or word fragments, called "tokens." Even with good data, these rankings may favor an incorrect answer simply because of patterns learned during training. A misrepresented religious fact could consistently rank highly despite being incorrect.
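Models typically turn raw scores (logits) into probabilities with a softmax. This minimal sketch uses invented scores for completing a prompt such as "The capital of France is" and deliberately gives the wrong token ("Lyon") the highest score, illustrating how a ranking can be wrong even when the mechanism works exactly as designed. The logit values are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical next-token scores; the wrong answer is scored highest on purpose.
logits = {"Paris": 2.0, "Lyon": 2.3, "Marseille": 0.5}
probs = softmax(logits)
ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)

for tok, p in ranked:
    print(f"{tok}: {p:.2f}")
```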

6. Decoding Strategy (Deterministic vs. Stochastic)

A decoding strategy can either pick the single highest-probability token each time (deterministic, or "greedy") or sample from the top options in proportion to their probabilities (stochastic). Deterministic decoding repeats the same mistake every time it sees the same prompt, while stochastic decoding introduces randomness that occasionally corrects errors but sometimes makes them worse.
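The two strategies can be compared side by side. This sketch, reusing hypothetical scores where the wrong token ranks first, implements greedy decoding and a common stochastic variant: temperature scaling followed by top-p (nucleus) filtering, which keeps the smallest set of top tokens whose combined probability reaches `top_p`. The function names and parameter values are illustrative assumptions, not any particular library's API.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Probabilities from logits; temperature < 1 sharpens, > 1 flattens."""
    scaled = {t: v / temperature for t, v in logits.items()}
    m = max(scaled.values())
    exps = {t: math.exp(v - m) for t, v in scaled.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}

def greedy(logits):
    """Deterministic: always return the single highest-scoring token."""
    return max(logits, key=logits.get)

def sample_top_p(logits, temperature=1.0, top_p=0.9, rng=random):
    """Stochastic: keep the smallest set of top tokens whose probability mass
    reaches top_p, then sample from that set in proportion to probability."""
    probs = softmax(logits, temperature)
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, mass = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        mass += p
        if mass >= top_p:
            break
    tokens, weights = zip(*kept)
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores where the wrong answer ("Lyon") ranks first.
logits = {"Paris": 2.0, "Lyon": 2.3, "Marseille": 0.5}
print(greedy(logits))  # always "Lyon": the error is locked in

rng = random.Random(0)  # seeded so the run is repeatable
draws = [sample_top_p(logits, rng=rng) for _ in range(1000)]
print({t: draws.count(t) for t in logits})
```

Greedy decoding returns the wrong token every time. Sampling returns the correct token a sizeable fraction of the time but still favors the wrong one, which is the "can rescue or worsen truth" trade-off in the table.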

7. Prompt Context

The quality and clarity of your question directly affect the accuracy of the AI's response. Ambiguous or poorly phrased prompts can confuse the model, leading to misleading or incorrect answers. With religious questions, vague phrasing can inadvertently invite misinterpretations or broad generalizations.

8. Hallucination Dynamics

AI occasionally "hallucinates," confidently generating information that is not grounded in its training data. This is especially dangerous in religious contexts, where the AI may assert theological positions, quote scriptures, or offer interpretations that simply do not exist.
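One imperfect signal of low confidence is the entropy of the next-token distribution: a flat distribution means the model has no clear favorite. The sketch below computes Shannon entropy in bits and flags steps above a threshold. The threshold of 1.5 bits and the example distributions are hypothetical, and the heuristic has clear limits: many services do not expose token probabilities, and a model can fabricate with low entropy, which is exactly the "confident fabrication" problem.

```python
import math

def entropy_bits(probs):
    """Shannon entropy (in bits) of a next-token probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def flag_low_confidence(probs, threshold_bits=1.5):
    """Hypothetical heuristic: flag a step whose entropy exceeds a threshold."""
    return entropy_bits(probs) > threshold_bits

confident = [0.97, 0.01, 0.01, 0.01]  # one clear favorite
uncertain = [0.25, 0.25, 0.25, 0.25]  # no favorite at all

print(entropy_bits(uncertain))  # 2.0
print(flag_low_confidence(confident), flag_low_confidence(uncertain))
```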

9. Post-processing and Guardrails

Some generative AI systems add safeguards such as fact-checking layers, retrieval of source documents, or moderation filters. However, religious truth often requires nuanced human interpretation that automated guardrails cannot fully capture, so these measures are limited in ensuring factual or doctrinal accuracy.
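A simple post-processing guardrail might check every citation in an answer against a list of references a human has verified. In this minimal sketch, the allowlist, the bracketed citation format, and the function name `unverified_citations` are all hypothetical. Note what it cannot do: it catches references that do not exist on the list, but it has no way to judge whether an interpretation of a real passage is sound.

```python
import re

# Hypothetical allowlist of references a reviewer has confirmed exist.
VERIFIED_SOURCES = {"Source A 1:1", "Source B 2:3"}

# Assumes citations are written in square brackets, e.g. [Source A 1:1].
CITATION = re.compile(r"\[([^\]]+)\]")

def unverified_citations(answer):
    """Return citations found in the answer that are not on the verified list."""
    return [c for c in CITATION.findall(answer) if c not in VERIFIED_SOURCES]

answer = "As taught in [Source A 1:1] and [Source C 9:99], ..."
print(unverified_citations(answer))  # ['Source C 9:99']
```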

10. User Interpretation

Finally, the responsibility lies partly with the user. Humans tend to equate fluency and confidence with correctness. AI’s polished and authoritative tone might convince users to trust outputs unquestioningly, especially in sensitive areas like religion.

Caution for Religious and Sensitive Queries

Understanding these layers highlights why caution is essential when relying on generative AI for religious truths. Religion inherently involves deeply nuanced interpretations, context-specific meanings, and profound personal beliefs. A generative AI system, operating through patterns, probabilities, and training data, cannot handle this complexity with reliable accuracy.

When seeking religious guidance or answers, always supplement AI interactions with trusted human authorities, scholarly sources, and personal introspection. Generative AI should be viewed as an informative tool, not a definitive authority, particularly for questions requiring wisdom, context, and spiritual understanding.